Until recently, SEO meant optimizing for Google’s algorithm.

You’d chase rankings, tweak metadata, and fight your way to the top of Page 1.

But generative AI tools are gradually changing that.

Google is still the leading power in search, with 5 trillion searches in 2024. ChatGPT accounts for 5 billion search-like prompts every month. The gap is huge, but many of your buyers now do their research on ChatGPT rather than visiting dozens of websites to find information about you.

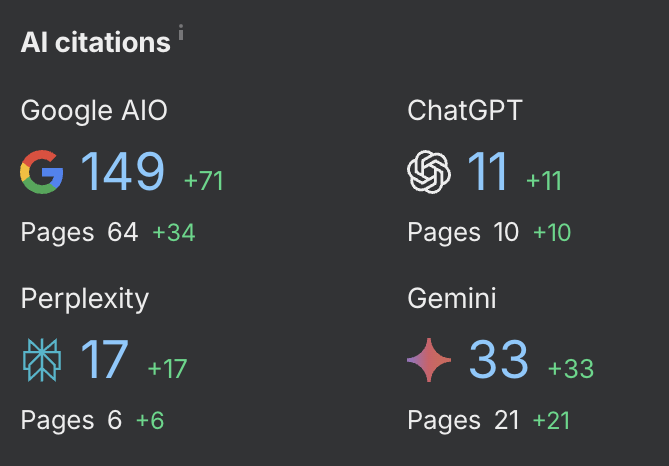

To reinforce the point: Ahrefs recently rolled out an AI citation report that shows which websites are being cited by AI tools like ChatGPT and Perplexity.

If your site isn’t showing up there, you may not exist in your buyer’s journey at all.

To understand this shift, I ran an experiment.

I tested how 7 popular AI tools (ChatGPT, Gemini, Perplexity, Claude, Grok, Copilot, and DeepSeek) handle source citations across 5 types of real-world prompts.

I wanted to find out:

- Do these tools cite sources? If so, when?

- Which tools give credit to websites—and which don’t?

- What happens when you ask for stats, definitions, product recommendations, or content?

- What should marketers and content teams do differently?

Let’s break it down.

The Hypothesis & Experiment Design

Before I get into prompt testing and citations, let’s talk about the tools themselves.

Each of these generative AI tools has a growing, loyal user base, and a role in shaping how information is discovered online.

Each tool is used differently:

- Some aim to replace search (Perplexity, Gemini)

- Some aim to embed assistance in workflows (ChatGPT, Copilot)

- Some act as real-time fact checkers (Grok)

- Others offer safe summarization (Claude) or local AI options (DeepSeek)

But none of them work like traditional search engines.

The Hypothesis

I noticed that AI tools don’t cite consistently. Instead, their behavior depends on the intent of the query, their design philosophy, and perhaps even legal risk.

My core hypothesis: The way AI tools cite (or don’t cite) sources depends on the type of question you ask, not just the tool itself.

In other words:

- Some prompts trigger citations (like stats)

- Others don’t (like “write an article”)

And marketers optimizing for SEO need to understand this new intent-citation dynamic if they want to stay visible.

Experiment Setup

To test this, I ran the same five prompts across all seven tools. Each prompt reflected a different search or task intent:

- Definition → What is B2B marketing?

- Process → How to choose tools for B2B marketing?

- Product Comparison → Which tool is better: Ahrefs or Semrush?

- Statistics → How many people in B2B use generative AI?

- Task → Write me a 300-word article on B2B marketing

For each response, I recorded:

- Whether sources were cited

- How many links were included

- If links pointed to brand sites, third-party blogs, or aggregators

- Whether the tool offered clickable or just textual citations

I also took screenshots (shared below in placeholders) to show how responses varied.
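One way to log these observations consistently is a small record per response. The sketch below is illustrative only: the `CitationRecord` class, its field names, and the example values are assumptions made for this example, not the actual sheet used in the experiment.

```python
from dataclasses import dataclass, field
from typing import List


# Hypothetical record for one tool/prompt response. The fields mirror
# the four things recorded for each response in this experiment.
@dataclass
class CitationRecord:
    tool: str
    prompt_type: str
    cited: bool                       # were any sources cited?
    link_count: int = 0               # how many links were included
    link_types: List[str] = field(default_factory=list)  # "brand", "third-party", "aggregator"
    clickable: bool = False           # clickable links vs. plain-text mentions

    def is_consistent(self) -> bool:
        """An uncited response should carry no links and no link types."""
        return self.cited or (self.link_count == 0 and not self.link_types)


# Placeholder values for illustration, not measured data.
example = CitationRecord(
    tool="Perplexity",
    prompt_type="definition",
    cited=True,
    link_count=5,
    link_types=["third-party"],
    clickable=True,
)
print(example.is_consistent())
```

Keeping every response in the same shape makes it easy to compare tools later, and the consistency check catches data-entry slips such as logging links for a response marked as uncited.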

Prompt 1: What is B2B Marketing?

This is the kind of question that shows up at the very top of the funnel.

If AI tools are trying to help users “understand a concept,” I expected at least some of them to cite sources, especially those that position themselves as research assistants.

Here’s what happened:

1. ChatGPT

Prompt: What is B2B Marketing?

Citation: No sources cited

Behavior: Delivered a well-structured, 3-paragraph response without any links or references.

Takeaway: Acts like a teacher, not a librarian. It’s trying to explain, not refer.

2. Gemini (Google)

Prompt: What is B2B Marketing?

Citation: Yes

Behavior: Cited multiple third-party marketing blogs and educational websites. Links appeared below the summary.

Takeaway: Surprisingly transparent—especially for a basic question. Likely pulling from indexed Google Search results.

3. Grok (X / Twitter AI)

Prompt: What is B2B Marketing?

Citation: No citations

Behavior: Delivered a short, conversational explanation. No links, footnotes, or references.

Takeaway: Grok acts like Twitter. It speaks in opinions, not footnotes.

4. Claude (Anthropic)

Prompt: What is B2B Marketing?

Citation: No sources cited

Behavior: Response felt formal and articulate, but no citation or attribution.

Takeaway: Leans toward safety and accuracy, but avoids external references.

5. Perplexity

Prompt: What is B2B Marketing?

Citation: Yes

Behavior: Cited multiple sources inline and included a “Sources” box at the bottom. Links appear at the top of the output.

Takeaway: Perplexity behaves like a hybrid between search engine and AI. Best performer in this category.

6. Microsoft Copilot

Prompt: What is B2B Marketing?

Citation: No sources

Behavior: Short paragraph, no citations. No indication where the information came from.

Takeaway: Despite being backed by Bing, it doesn’t act like a search engine here.

7. DeepSeek

Prompt: What is B2B Marketing?

Citation: No sources

Behavior: Provided a paragraph-length answer with no links or citations.

Takeaway: Similar to Claude - safe, broad, and self-contained.

Here’s a quick summary of the citation pattern for the “definition” prompt:

Out of seven tools, only Perplexity and Gemini cited sources for a basic definition. Everyone else treated the answer as general knowledge.

Prompt 2: How to Choose Tools for B2B Marketing?

This prompt reflects a very common real-world use case: a buyer trying to evaluate tools, build a shortlist, or understand selection criteria. I expected this question to trigger more citations than the previous definition-based prompt.

But the results were inconsistent, and surprising in some cases.

1. ChatGPT

Prompt: How to choose tools for B2B marketing?

Citation: No

Behavior: Gave a detailed, article-style answer. It outlined factors like budget, integrations, features, and scalability—but didn’t reference any external source.

Takeaway: Strong content, but no citations. Operates more like a content generator than a curator.

2. Gemini

Prompt: How to choose tools for B2B marketing?

Citation: No

Behavior: Listed out 6–7 evaluation criteria and examples of B2B tools, but did not cite or link to any sources.

Takeaway: Unlike the previous definition prompt, Gemini didn’t offer citations here. Possibly because the answer was framed as a framework rather than a fact.

3. Grok

Prompt: How to choose tools for B2B marketing?

Citation: No

Behavior: Answered with bullet points and marketing considerations. Zero links or source attribution.

Takeaway: Treats this as subjective advice rather than a data-driven question.

4. Claude

Prompt: How to choose tools for B2B marketing?

Citation: No

Behavior: Generated a thoughtful, structured response covering needs assessment, team input, and trial usage. No external references.

Takeaway: Claude is consistent. Well-articulated but internally sourced.

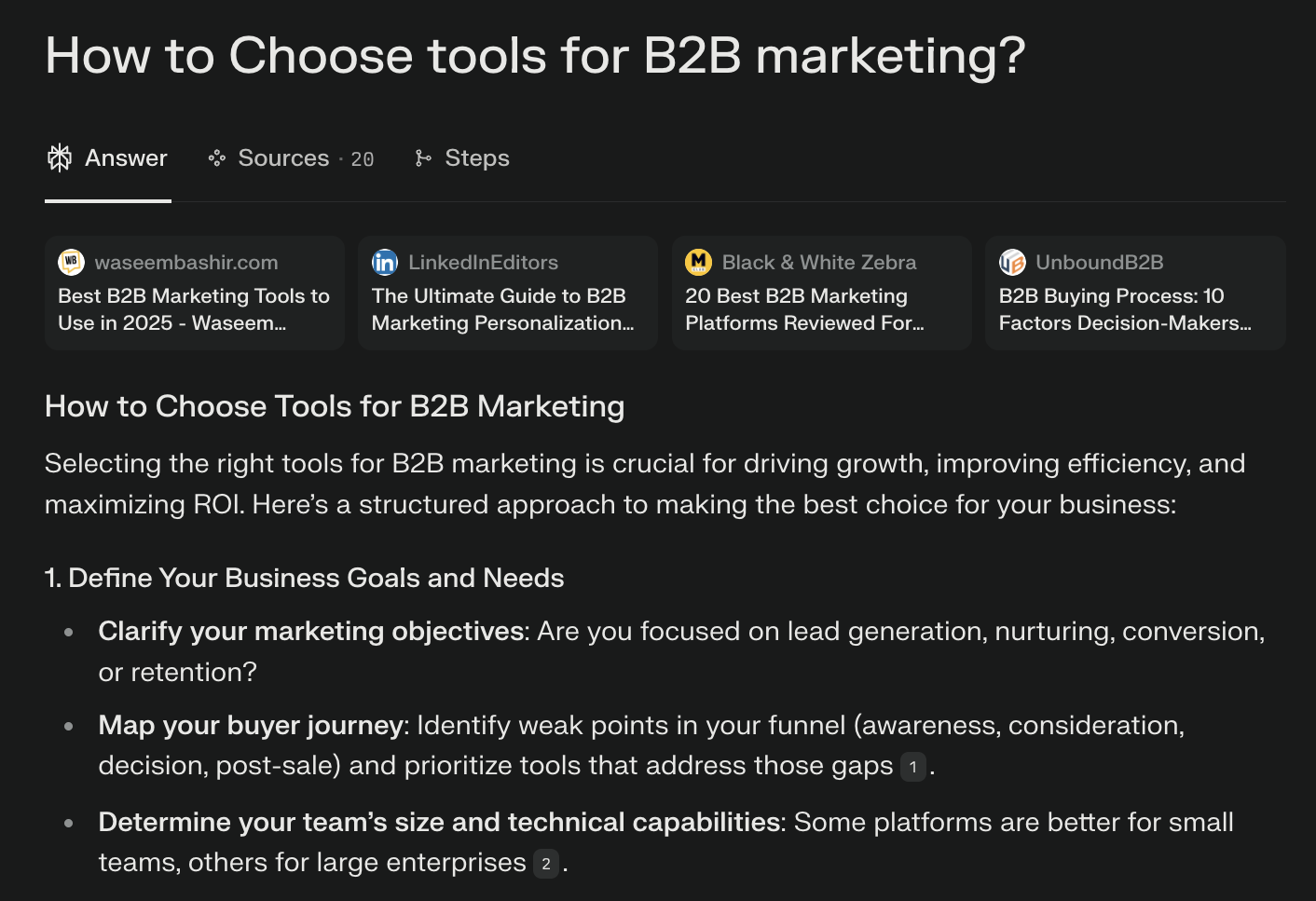

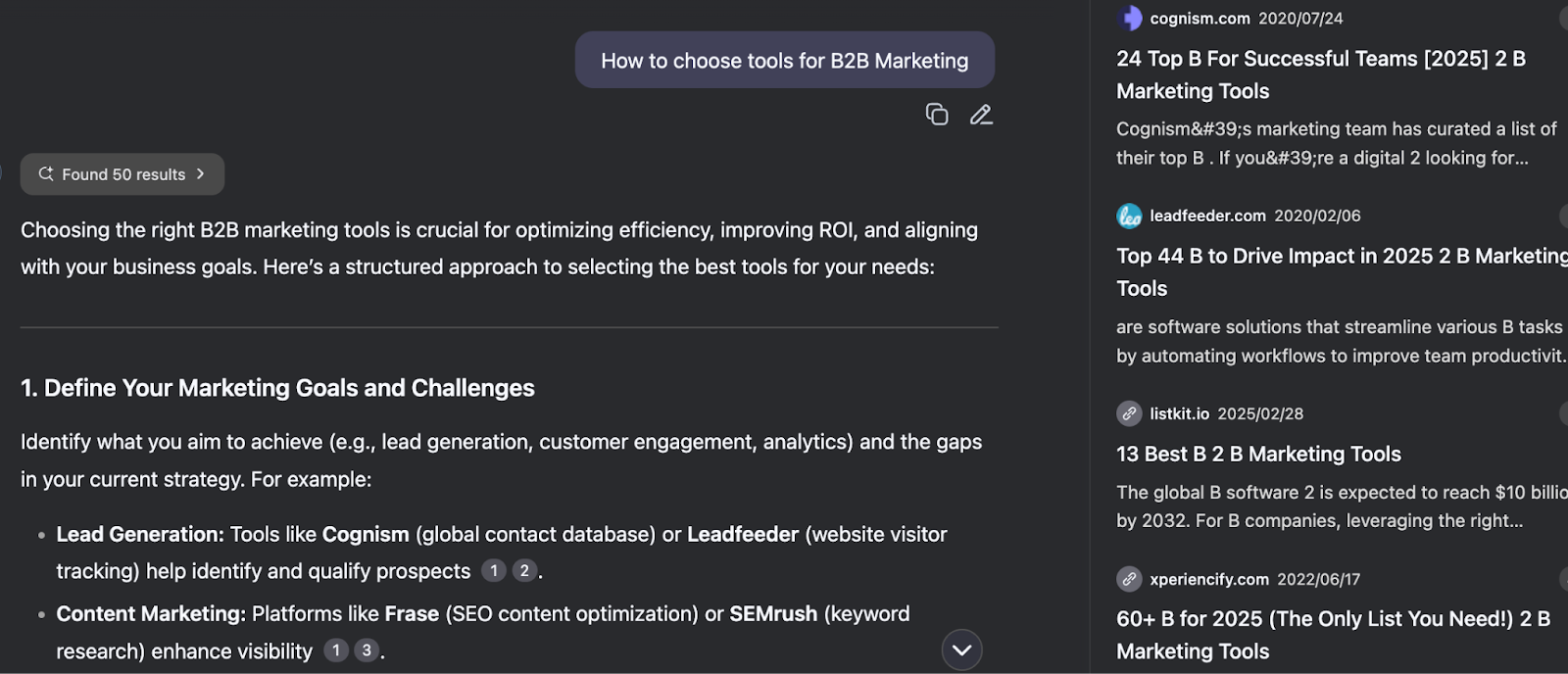

5. Perplexity

Prompt: How to choose tools for B2B marketing?

Citation: Yes

Behavior: Cited sources inline and included a reference box with multiple blog posts, comparison pages, and marketing tool roundups.

Takeaway: The only tool that treats this as a search-like query. Reads like a summary of top articles on the web.

6. Microsoft Copilot

Prompt: How to choose tools for B2B marketing?

Citation: No

Behavior: Gave a short summary with suggestions but didn’t offer any links or source context.

Takeaway: Like ChatGPT, it delivers clean advice but avoids sourcing.

7. DeepSeek

Prompt: How to choose tools for B2B marketing?

Citation: Yes

Behavior: Provided a response and listed 4–5 clickable sources on the right panel.

Takeaway: Surprisingly helpful here. DeepSeek leaned more toward research assistant than generative tool.

Here’s a quick summary of the citation pattern for the “process” prompt:

Only Perplexity and DeepSeek cited sources for a process-oriented prompt. Gemini, which cited sources in the definition prompt, offered none here, suggesting tool behavior may shift based on how “subjective” a query feels.

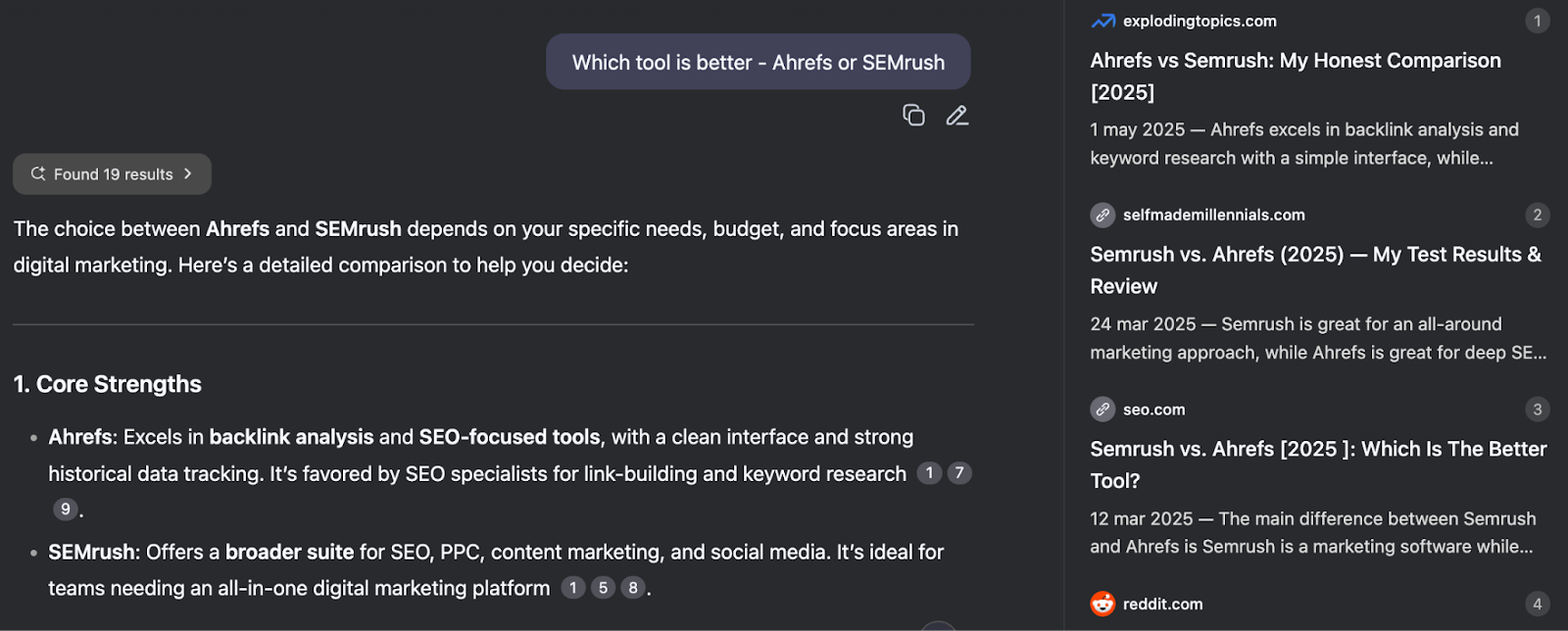

Prompt 3: Which Tool is Better - Ahrefs or Semrush?

This time, I gave the tools a more commercially sensitive prompt: a comparison between two well-known SEO platforms. The goal was to see how AI tools handle product/brand mentions.

Do they cite only brand websites? Do they reference third-party reviews? Or do they dodge citations entirely?

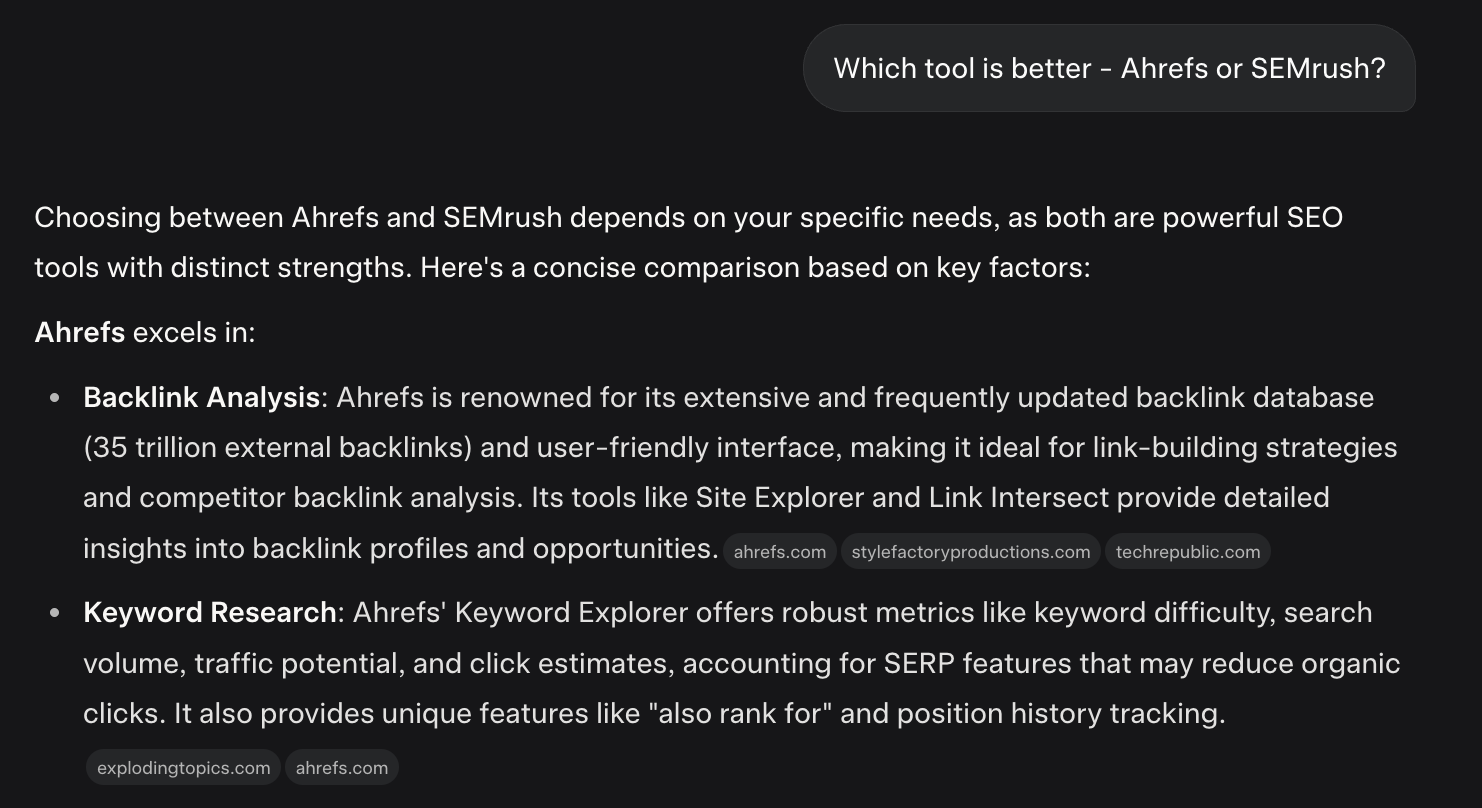

1. ChatGPT

Prompt: Which tool is better - Ahrefs or Semrush?

Citation: No

Behavior: Gave a neutral, comparative overview—discussing use cases, strengths, and features of both tools. No sources or links included.

Takeaway: A well-balanced response, but again: no citations.

2. Gemini

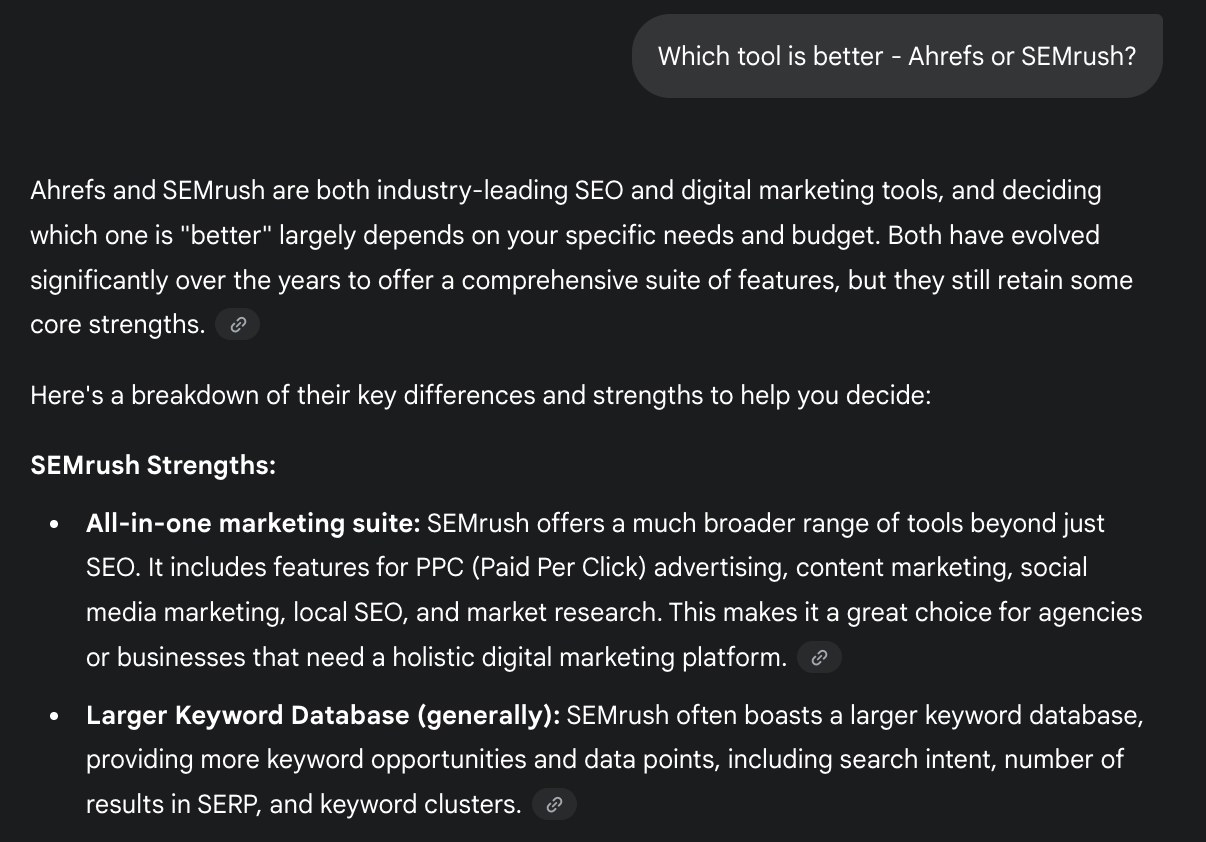

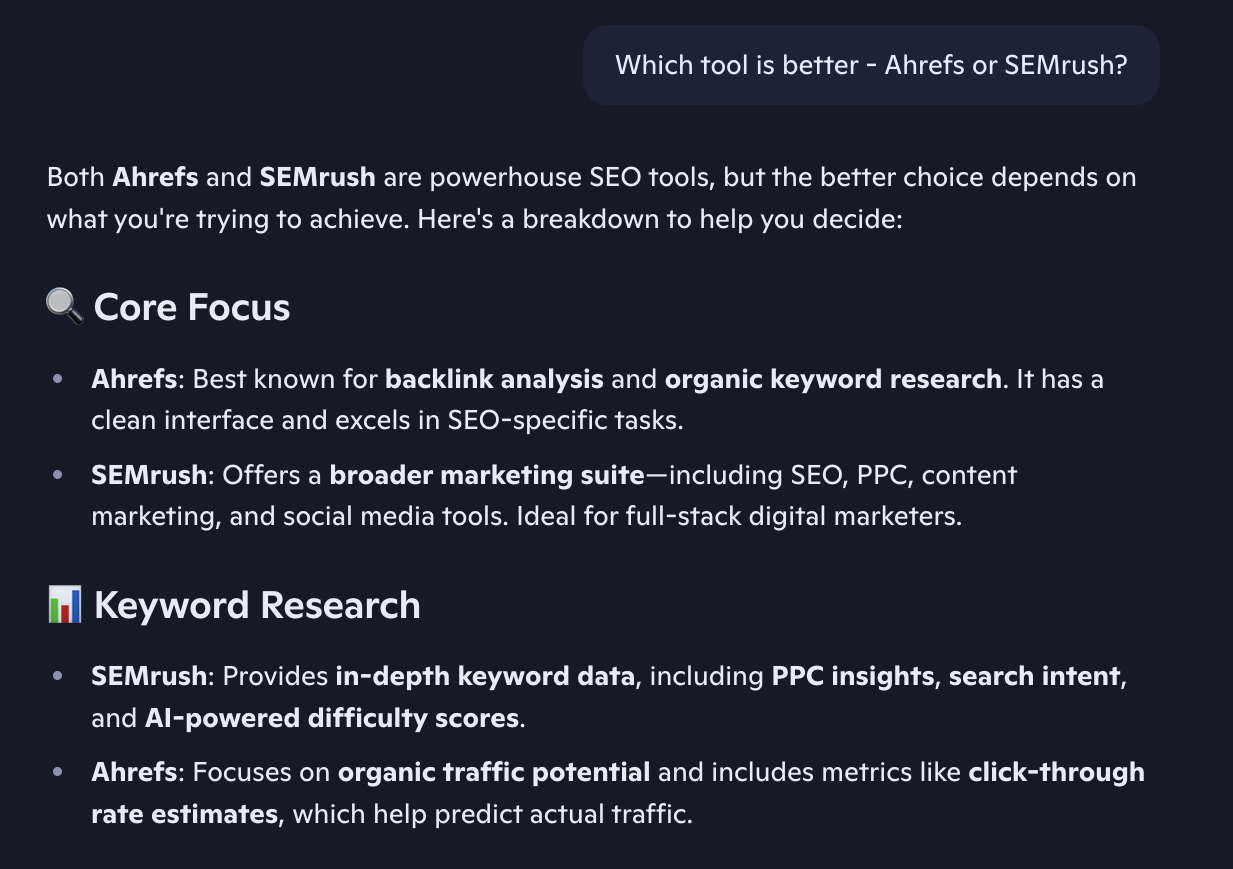

Prompt: Which tool is better - Ahrefs or Semrush?

Citation: Yes

Behavior: Cited third-party blog comparisons (like G2, Capterra, and SEO blogs). Displayed clickable links below the response.

Takeaway: Returned to citing behavior, likely because this is a branded query.

3. Grok

Prompt: Which tool is better - Ahrefs or Semrush?

Citation: Yes

Behavior: For the first time, Grok included external citations—linking to blog posts and SEO forums.

Takeaway: Grok treats product comparisons with more scrutiny than abstract prompts.

4. Claude

Prompt: Which tool is better - Ahrefs or Semrush?

Citation: Yes

Behavior: Cited multiple sources inline and explained the differences in pricing, UI, and user base.

Takeaway: Claude becomes more source-friendly when you introduce brand comparisons.

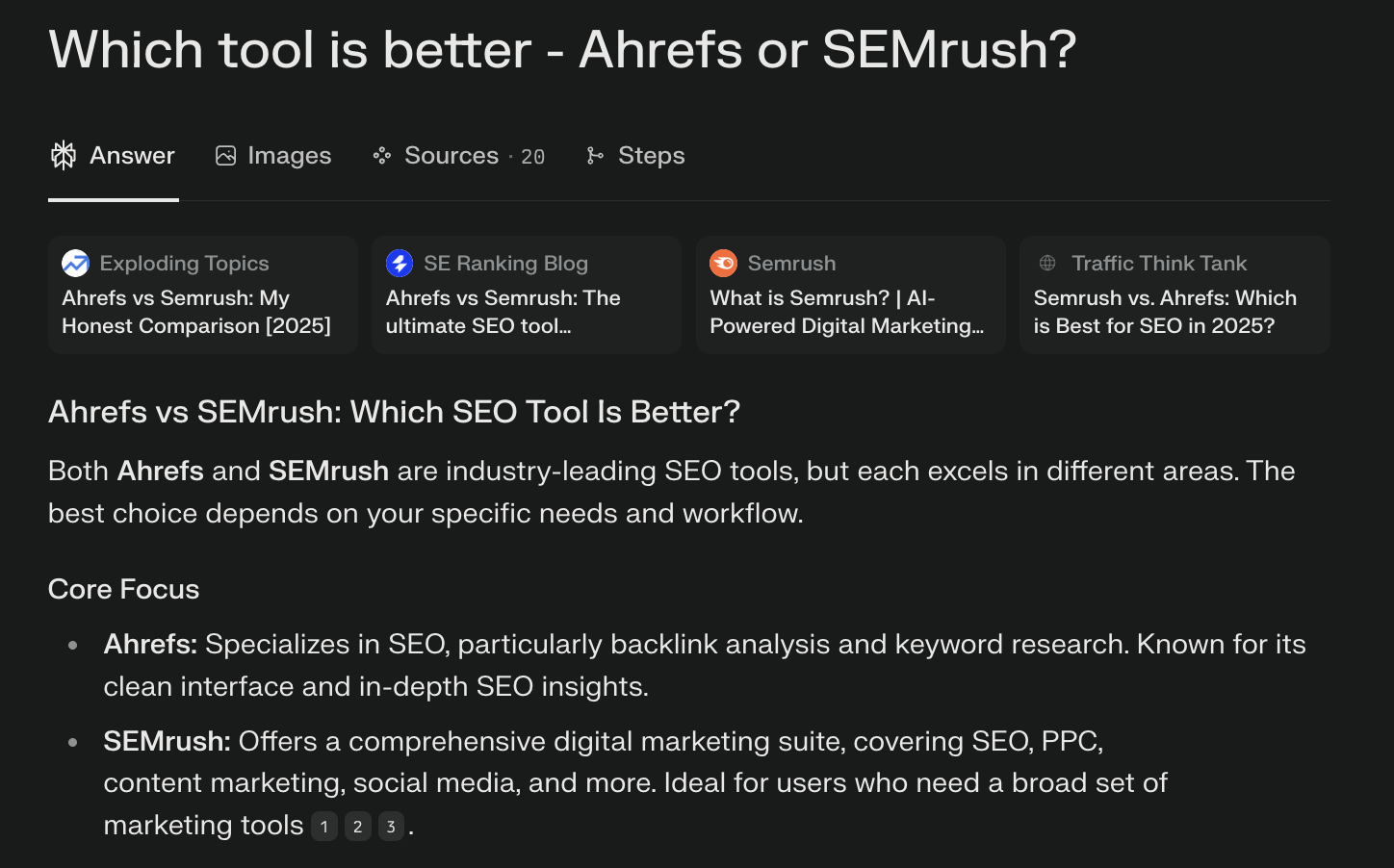

5. Perplexity

Prompt: Which tool is better - Ahrefs or Semrush?

Citation: Yes

Behavior: As expected, Perplexity pulled data from top-ranking comparison articles. Included citations both inline and in a dedicated source list.

Takeaway: Still the most consistent citation performer across all prompts.

6. Microsoft Copilot

Prompt: Which tool is better - Ahrefs or Semrush?

Citation: No

Behavior: Provided a short side-by-side description, but no links or references.

Takeaway: Copilot continues to keep sources invisible.

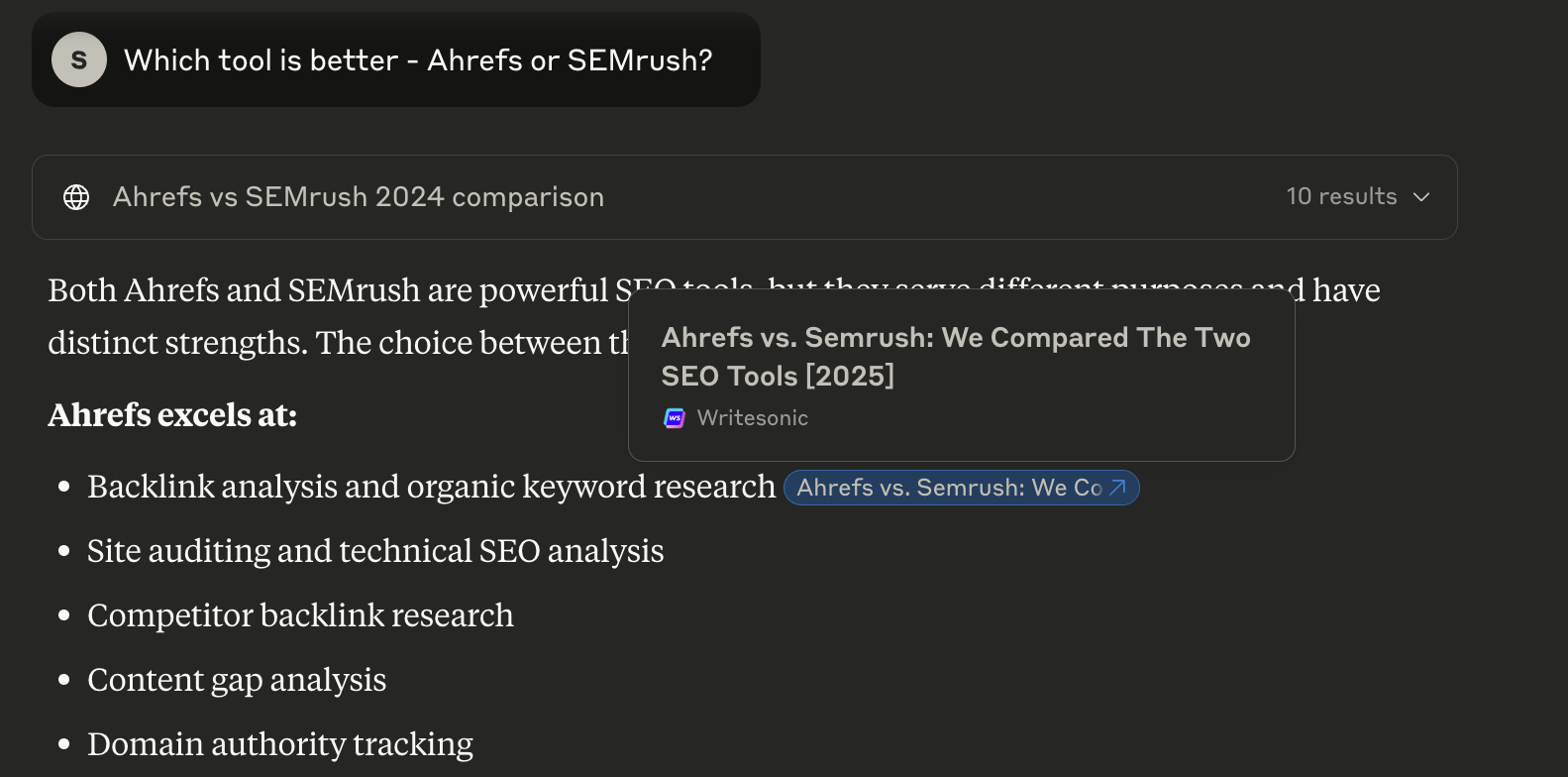

7. DeepSeek

Prompt: Which tool is better — Ahrefs or Semrush?

Citation: Yes

Behavior: Included a list of source URLs at the end, many of which were blog-based comparisons.

Takeaway: Cited again, as in the previous prompt. DeepSeek appears to favor citing on brand-specific queries.

Here’s a quick summary of the citation pattern for the “product comparison” prompt:

This was the first prompt where five out of seven tools cited sources, likely because the prompt mentioned brands and implied decision-making.

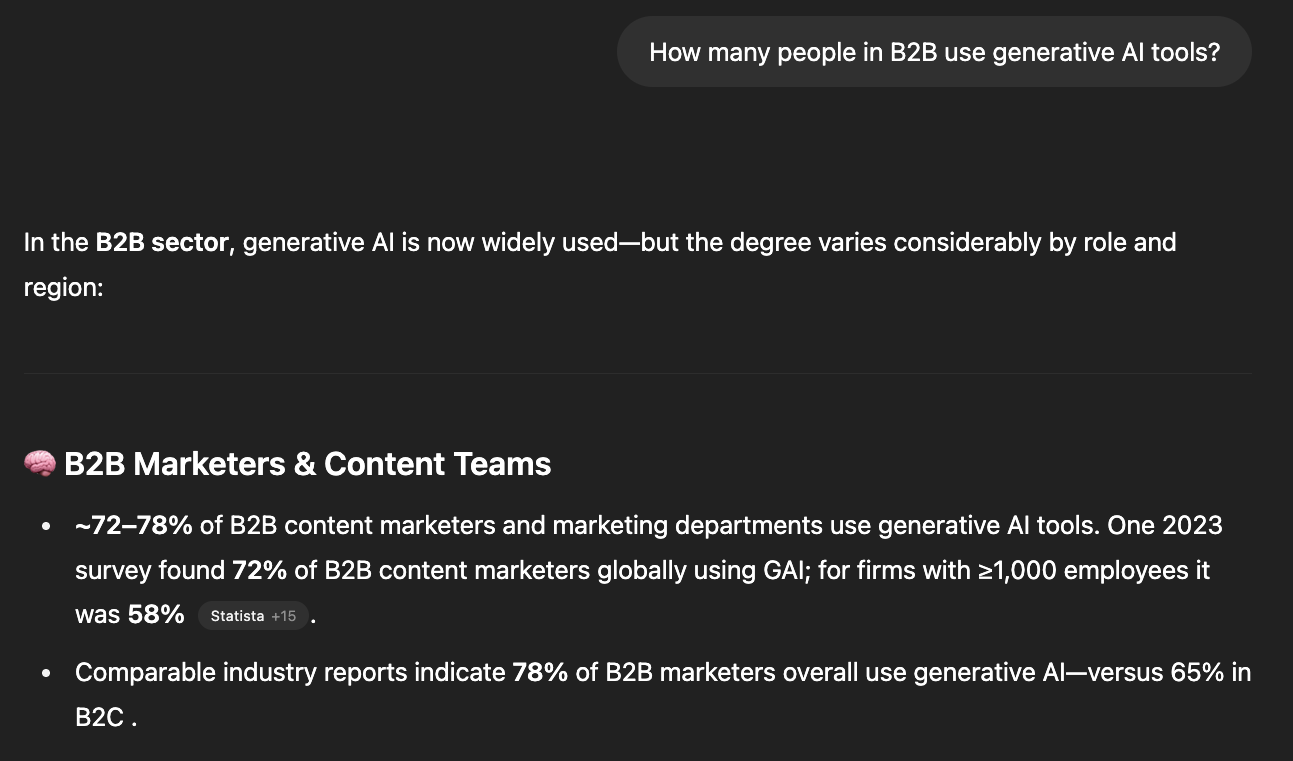

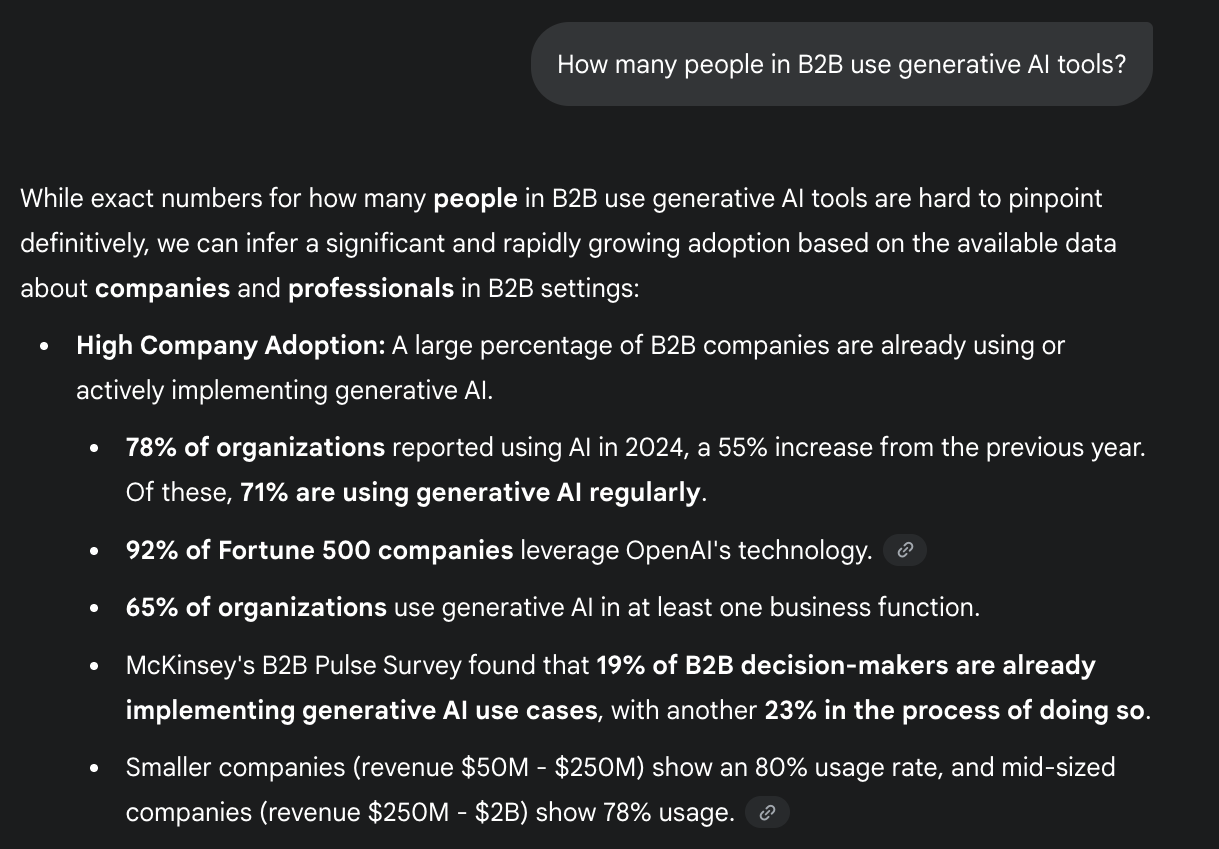

Prompt 4: How Many People in B2B Use Generative AI?

This question tests whether the tools cite sources when asked for a statistic, something that typically requires a verifiable reference.

My expectation: If any category should force AI tools to cite, it’s this one. Stats should be backed by real, timely data. Let’s see how they did.

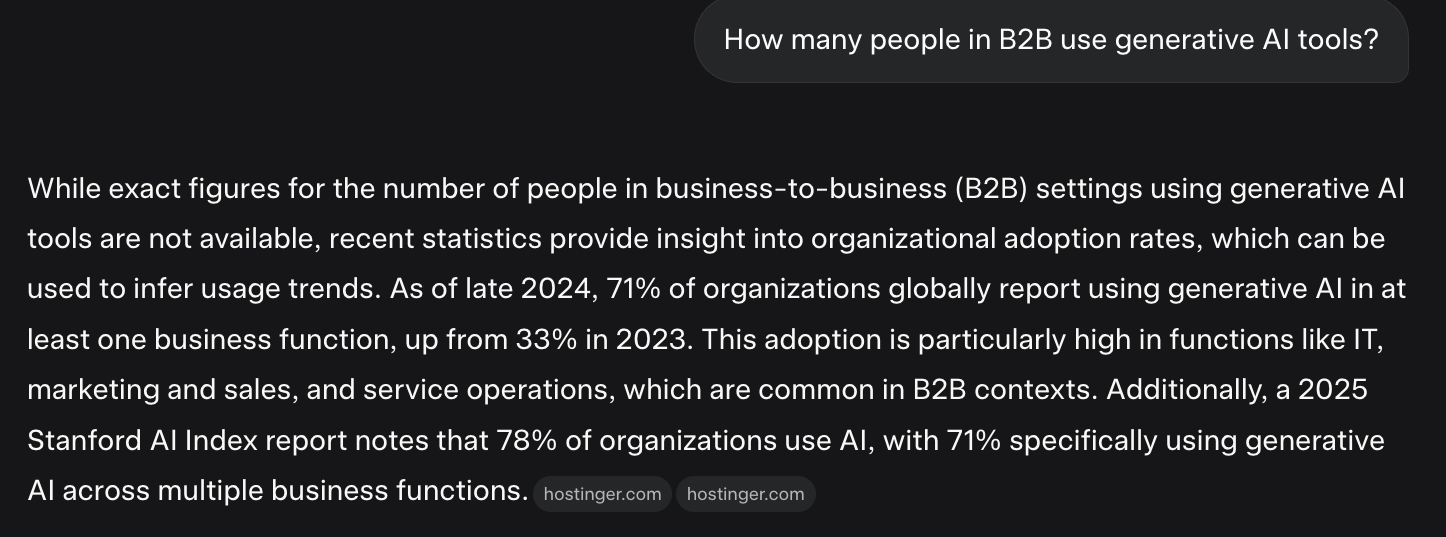

1. ChatGPT

Prompt: How many people in B2B use generative AI?

Citation: Yes

Behavior: This was the first prompt where ChatGPT cited sources, though only after stating the number. It referenced a 2023 McKinsey study.

Takeaway: It’s willing to cite when prompted for data, but even then, just barely.

2. Gemini

Prompt: How many people in B2B use generative AI?

Citation: Yes

Behavior: Cited data from research reports and industry articles. Sources appeared along with the main response.

Takeaway: Predictable return to citation behavior when asked for numbers.

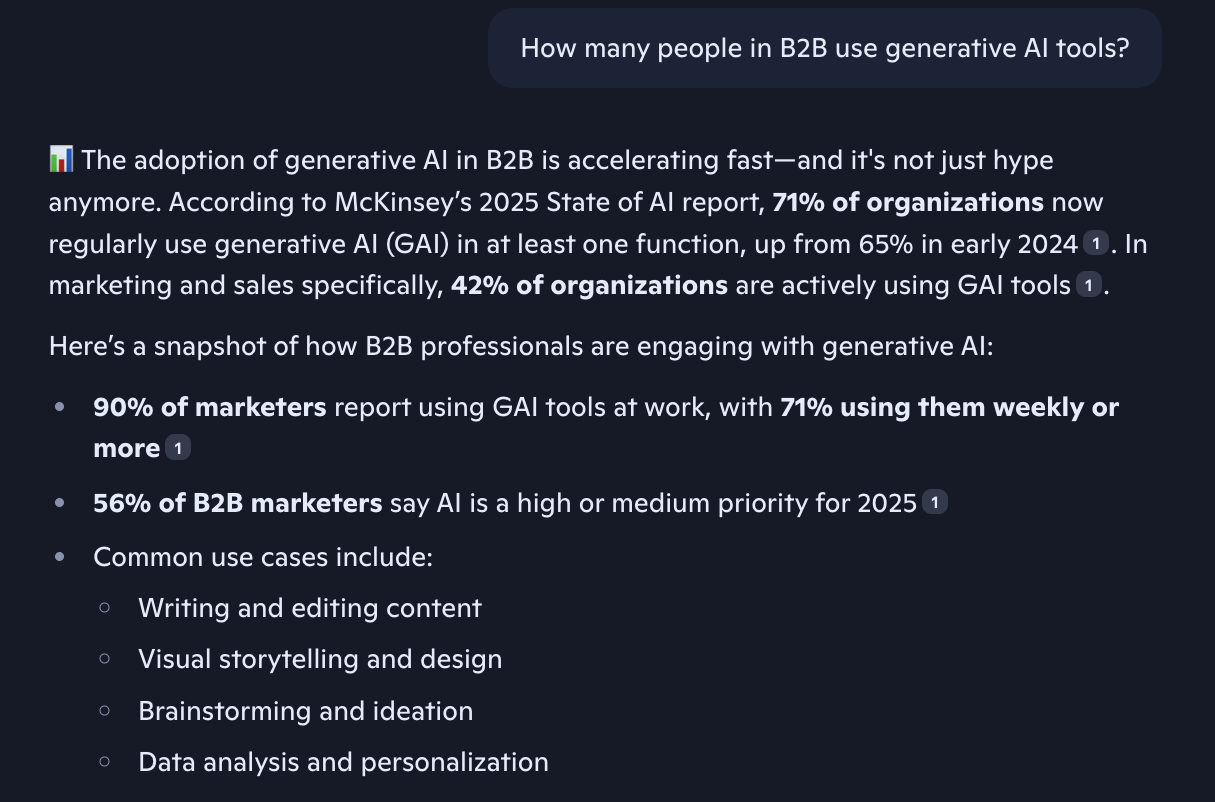

3. Grok

Prompt: How many people in B2B use generative AI?

Citation: Yes

Behavior: Referenced real-time posts and research snippets. Included sources at the end.

Takeaway: Grok becomes more transparent when data credibility is at stake.

4. Claude

Prompt: How many people in B2B use generative AI?

Citation: Yes

Behavior: Cited 3–4 recent studies, mostly from Gartner and Deloitte. Listed links clearly.

Takeaway: Switched from closed response to fully referenced when asked for stats.

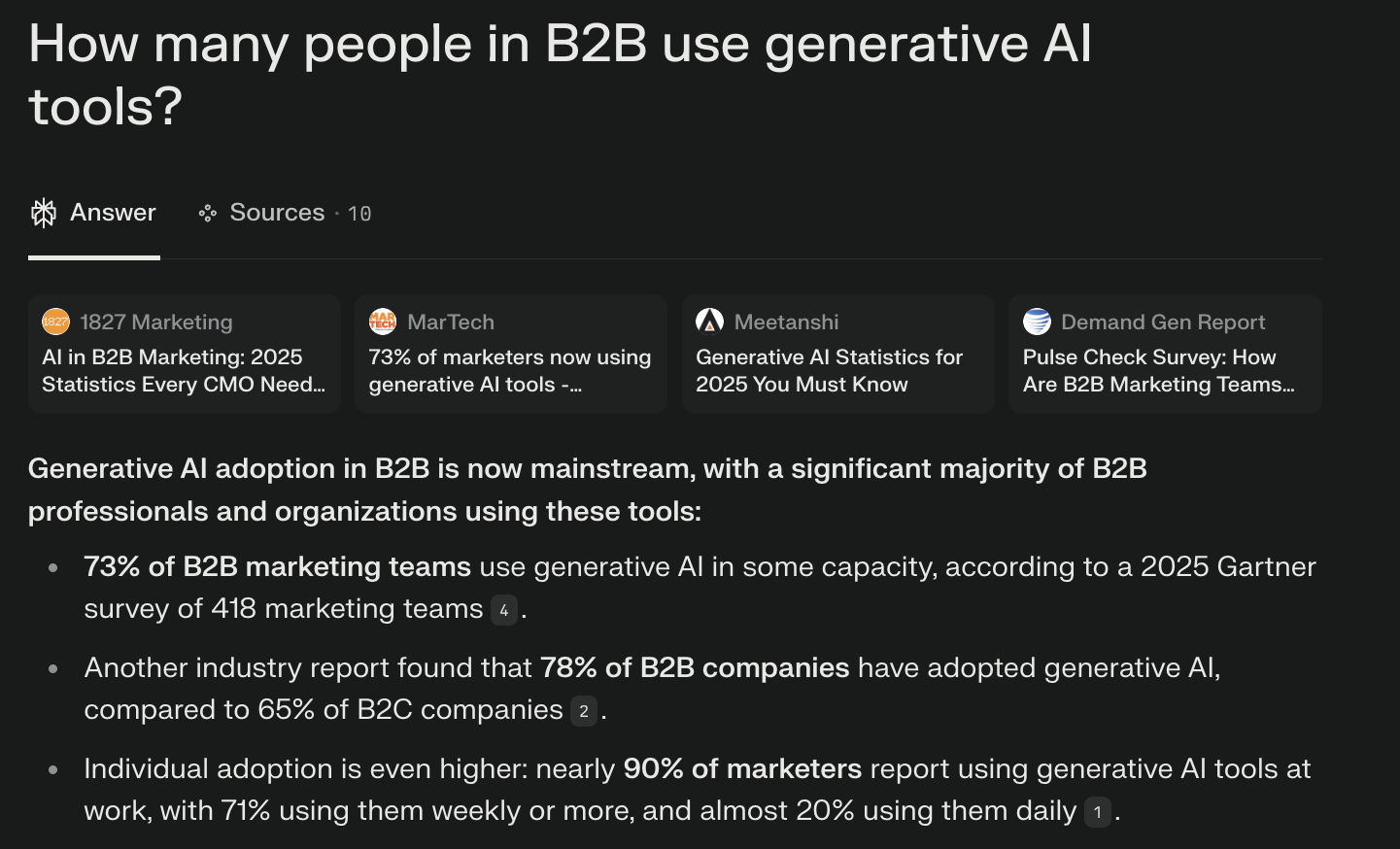

5. Perplexity

Prompt: How many people in B2B use generative AI?

Citation: Yes

Behavior: Strongest of all. Listed five sources with dates and clickable references.

Takeaway: Perplexity treats every stat like a search result. Reliable.

6. Microsoft Copilot

Prompt: How many people in B2B use generative AI?

Citation: Yes

Behavior: For the first time, Copilot cited sources. They were shown along with the response.

Takeaway: Even Copilot can’t ignore citation responsibility when numbers are involved.

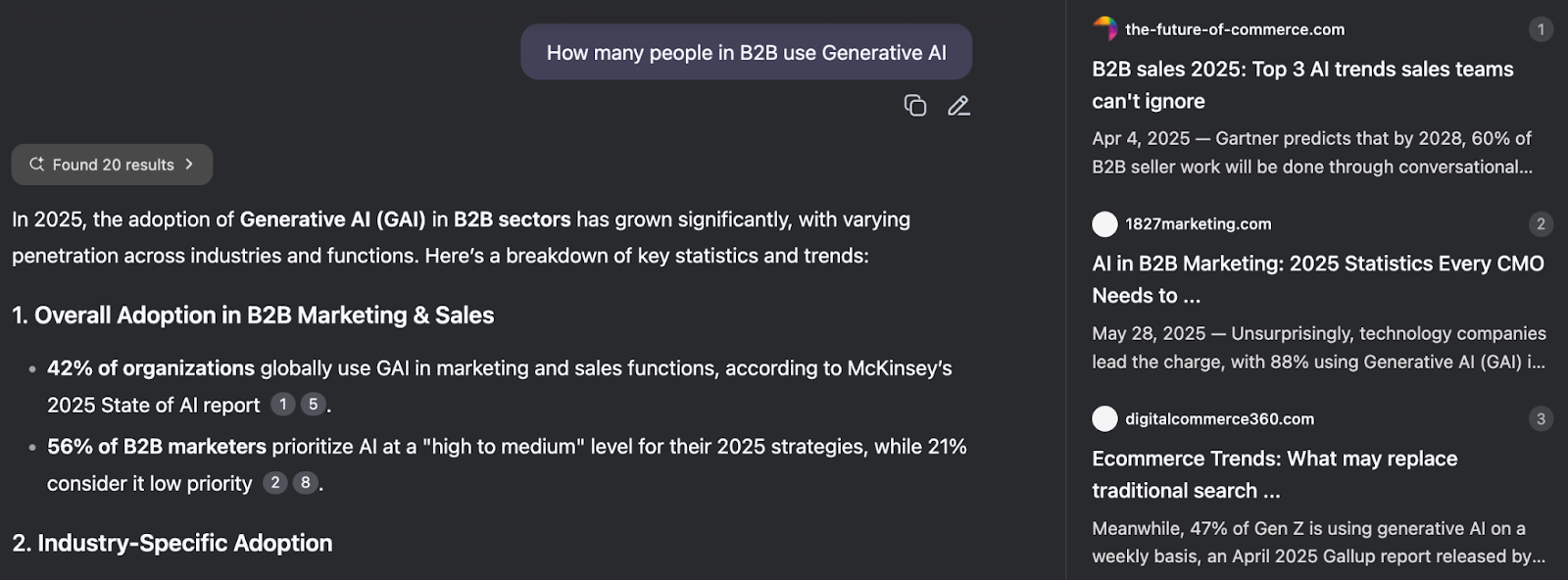

7. DeepSeek

Prompt: How many people in B2B use generative AI?

Citation: Yes

Behavior: Shared links to Chinese and English research papers and industry articles.

Takeaway: Maintained consistency with prompt type. Solid showing.

Here’s a quick summary of the citation pattern for the “statistics” prompt:

This was the only prompt where every tool cited sources.

Key insight: When numbers are involved, citations become unavoidable. AI tools don’t want to get caught making up data, especially not in enterprise use cases.

Prompt 5: Write Me a 300-Word Article on B2B Marketing

This was the most “content generation” style task-based prompt of all five. It mimics how founders, marketers, and freelancers often use AI today to quickly generate drafts, intros, or short-form articles.

I wasn’t expecting much citation behavior here, but I still wanted to test whether any tool acknowledged sources when creating something original.

Here’s how each one responded.

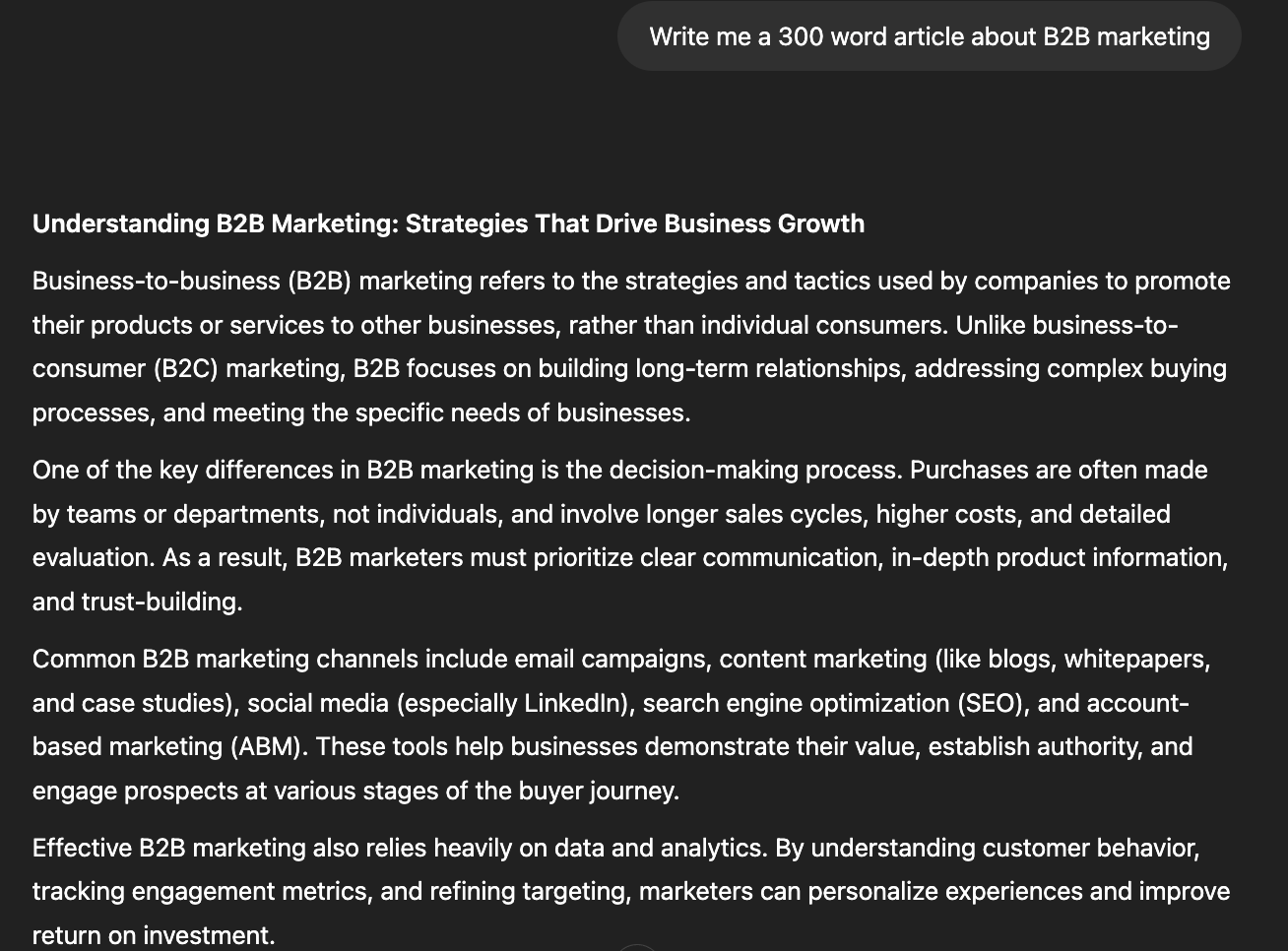

1. ChatGPT

Prompt: Write me a 300-word article on B2B marketing

Citation: No

Behavior: Generated a full article with an introduction, body, and conclusion. Polished tone, no links or references included.

Takeaway: Pure generation mode. No attribution, no mention of where facts or stats came from.

2. Gemini

Prompt: Write me a 300-word article on B2B marketing

Citation: Yes

Behavior: Included 2–3 clickable citations below the generated content. Links pointed to marketing blogs and Google-indexed sources.

Takeaway: Stands out by still offering citations even when asked to write content, possibly due to deeper Google integration.

3. Grok

Prompt: Write me a 300-word article on B2B marketing

Citation: No

Behavior: Generated a concise blog-style post. Focused on voice and brevity. No sources or footnotes included.

Takeaway: Treats task prompts as creative, not factual—no citations provided.

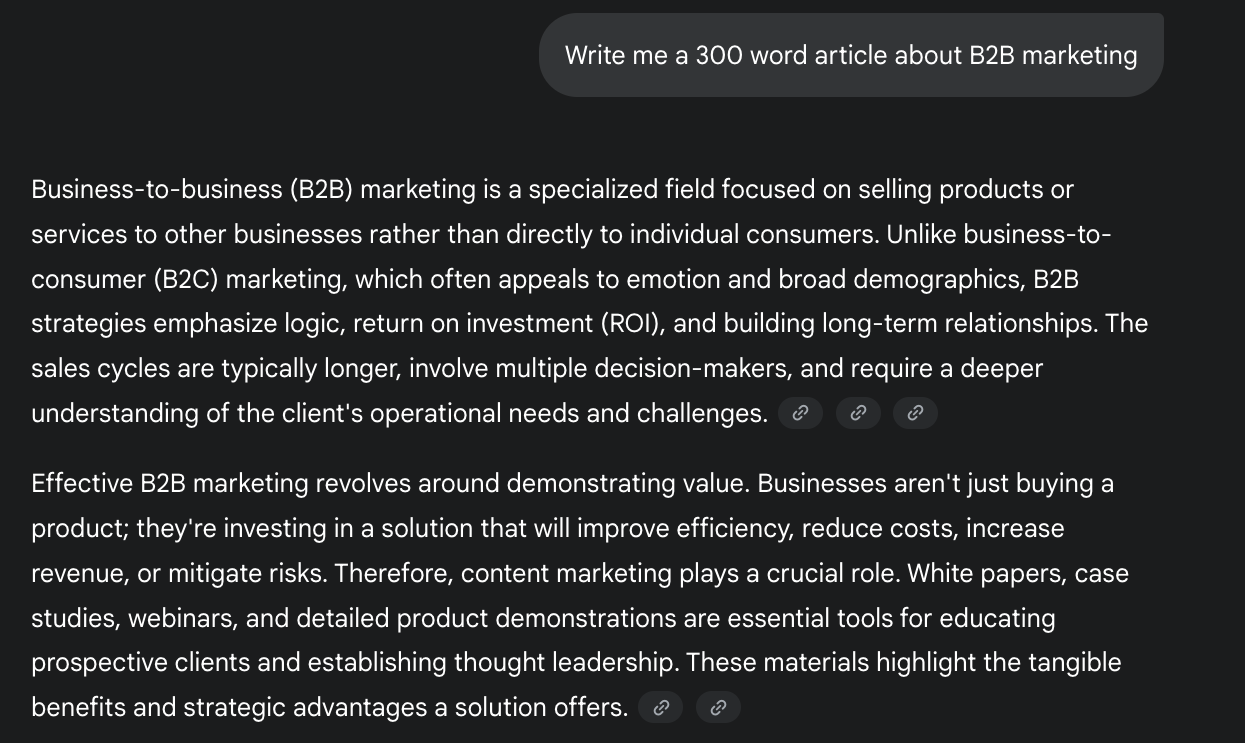

4. Claude

Prompt: Write me a 300-word article on B2B marketing

Citation: No

Behavior: Delivered a well-structured article with clear segmentation and professional tone. No references given.

Takeaway: Claude takes the prompt literally: write something, don’t research something.

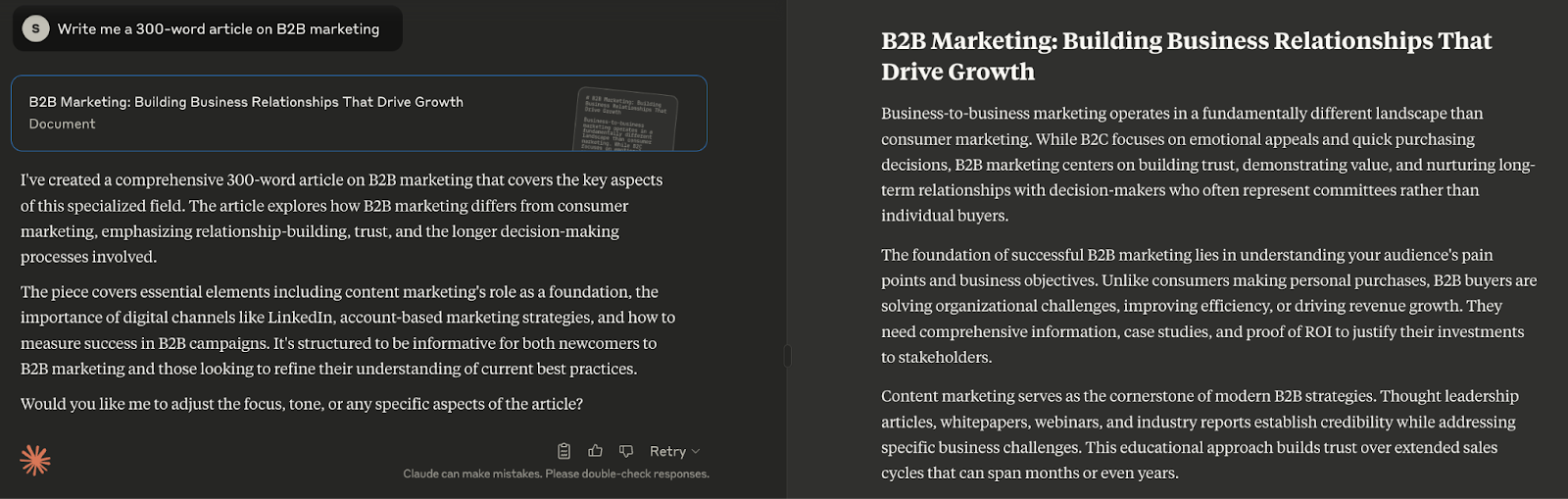

5. Perplexity

Prompt: Write me a 300-word article on B2B marketing

Citation: Yes

Behavior: Cited multiple sources below the article, mostly from marketing blogs, SEO guides, and thought leadership sites.

Takeaway: Even in generation mode, Perplexity behaves like a research assistant.

6. Microsoft Copilot

Prompt: Write me a 300-word article on B2B marketing

Citation: No

Behavior: Generated a basic post with no links or source attribution.

Takeaway: Copilot sticks to creation mode without pulling references—even though it draws from Bing.

7. DeepSeek

Prompt: Write me a 300-word article on B2B marketing

Citation: No

Behavior: Wrote a fluent short article in one block. No citations or link suggestions offered.

Takeaway: For DeepSeek, generation ≠ research.

Here’s a quick summary of the citation pattern for the “task” prompt:

Only Gemini and Perplexity cited sources when asked to generate original content.

What’s interesting is that some tools, which cited earlier, stopped citing here. When you switch from “ask and learn” to “write and create,” most tools flip into self-contained mode.

Finally, How Do AI Tools Cite Sources?

Now that the test is complete, let’s recap how each tool behaved across all five prompt types, and what that means for your content strategy.

Here’s a quick summary of the patterns worth noticing:

- Perplexity is the only tool that cited sources in all five prompts. It behaves like a citation-first search engine, regardless of task type.

- Statistical prompts triggered the most consistent citations. Every tool cited sources when numbers were involved. This was the only prompt where Copilot and ChatGPT showed citations.

- Most tools skipped citations on task-based prompts. When asked to “write an article,” tools shifted to generation mode, even if the content was factual.

- Gemini and Claude behave inconsistently. Gemini cited in 4 of 5 prompts and Claude in 2 of 5, and both skipped prompts where you might expect sources (e.g. process frameworks).

- Grok only cited when the prompt referenced brands or data. It tends to treat open-ended or “opinion-style” questions as conversational, not research-driven.

- DeepSeek behaves more like a search aggregator than a pure LLM. It was especially strong on process and product queries, and weak on task prompts.
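The per-tool and per-prompt totals can be re-derived from the results reported in the five prompt sections. The sketch below simply encodes each "Citation: Yes/No" as a boolean and sums the rows and columns. The names `RESULTS`, `per_tool_counts`, and `per_prompt_counts` are made up for this example.

```python
# Citation results as reported in the per-prompt sections
# (True = the tool cited sources for that prompt).
PROMPTS = ["definition", "process", "comparison", "statistics", "task"]

RESULTS = {
    "ChatGPT":    [False, False, False, True,  False],
    "Gemini":     [True,  False, True,  True,  True],
    "Grok":       [False, False, True,  True,  False],
    "Claude":     [False, False, True,  True,  False],
    "Perplexity": [True,  True,  True,  True,  True],
    "Copilot":    [False, False, False, True,  False],
    "DeepSeek":   [False, True,  True,  True,  False],
}


def per_tool_counts(results):
    """Number of prompts (out of 5) on which each tool cited sources."""
    return {tool: sum(row) for tool, row in results.items()}


def per_prompt_counts(results, prompts):
    """Number of tools (out of 7) that cited sources for each prompt type."""
    return {
        prompt: sum(row[i] for row in results.values())
        for i, prompt in enumerate(prompts)
    }


for tool, n in per_tool_counts(RESULTS).items():
    print(f"{tool}: {n}/5")
for prompt, n in per_prompt_counts(RESULTS, PROMPTS).items():
    print(f"{prompt}: {n}/7")
```

Tallied this way, Perplexity cites on 5 of 5 prompts, Gemini on 4, DeepSeek on 3, Grok and Claude on 2 each, and ChatGPT and Copilot on 1 each. By prompt, statistics reaches 7 of 7 tools, product comparison 5 of 7, and the other three prompt types 2 of 7 each.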

So, Do Citations Matter?

They do, but not the way they used to.

In traditional SEO, your goal was to earn clicks. In AI search, your goal is to get mentioned, summarized, or linked, even if nobody clicks.

Buyers may never visit your site. But your narrative might still shape their decision, if you show up in the right AI answers.

What This Means for Marketers: Visibility ≠ Clicks Anymore

Traditional SEO rewarded one thing: click-throughs. If your blog ranked on Google’s Page 1, you had a chance to convert that traffic.

But AI tools don’t work that way.

They don’t always show your link. They don’t always cite your site. And in many cases, they summarize your content without ever sending traffic back.

So if you’re still measuring success by organic traffic alone, you’re missing the bigger picture.

The New Visibility Stack for Generative AI Search

To stay visible in this new era, you need to optimize across three layers:

1. Indexability

- Are your pages being crawled and stored in sources that AI tools trust (Google, Bing, Perplexity’s index)?

- Do you have structured data (schema.org, Open Graph tags)?

- Is your content being referenced on third-party platforms (Quora, Reddit, news)?

2. Contextual Relevance

- Are your brand or product names showing up in content that AI models are summarizing?

- Is your value prop clear enough that an AI tool can extract and rephrase it accurately?

3. Generative Framing

- Are you writing in a way that makes it easy for AI tools to summarize you?

- Are your stats, frameworks, and conclusions AI-friendly (short, declarative, logically chunked)?
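For the structured-data question in the Indexability layer, here is a minimal sketch of generating schema.org `Article` markup as JSON-LD. The function name `article_jsonld` and every value passed to it are placeholders for illustration. Whether any particular AI tool reads this markup is an assumption; the experiment above did not test it.

```python
import json


def article_jsonld(headline, author_name, publisher_name, date_published):
    """Build a schema.org Article description as a JSON-LD string."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "publisher": {"@type": "Organization", "name": publisher_name},
        "datePublished": date_published,  # ISO 8601 date string
    }
    return json.dumps(data, indent=2)


# Placeholder values for illustration only.
markup = article_jsonld(
    headline="How AI Tools Cite Sources",
    author_name="Jane Doe",
    publisher_name="Example Co",
    date_published="2025-01-15",
)

# The JSON-LD is embedded in the page inside a script tag.
print(f'<script type="application/ld+json">\n{markup}\n</script>')
```

The same fields (author, publisher, date) also cover the attribution basics: a named author, a named brand, and a publication date attached to the content.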

Should You Chase Citations?

Not directly. Most users don’t click them anyway, especially inside tools like ChatGPT or Claude.

But you should absolutely:

- Make your content easily attributable (use brand names, author bios, dates)

- Seed your insights into communities and open platforms that AI tools scan

- Publish in formats that surface in search-like tools (lists, FAQs, summaries)

Perplexity, Gemini, and DeepSeek are already pulling from those structures. The rest will follow.

What This Means for Content Teams

You don’t need to write more. You need to write smarter.

- Target AI discovery, not just search volume

- Create summary-ready content that answers with clarity

- Focus on brand imprint, even if you never get a backlink

TL;DR — What You Should Do Differently Starting Today

You’re not losing visibility because you’re not ranking.

You’re losing it because you’re not being mentioned, cited, or summarized inside the tools your buyers are already using.

Here’s what to take away from this experiment:

- Only Perplexity consistently cites across all prompt types.

- Statistics prompts trigger the most citations across tools.

- Definition and task-based prompts rarely trigger citations (only 2 of 7 tools cited for each).

- Being mentioned inside an AI-generated response is often more powerful than being linked.

Here are 5 things B2B SaaS marketers should start doing to crack AI-native discoverability:

1. Prioritize Structured Content That’s Easy to Summarize

- Use bold headers, numbered lists, and FAQs.

- Make your conclusions easy for machines to lift.

2. Get Referenced on Trusted, Crawled Platforms

- Don’t rely solely on your blog. Aim for community posts, roundups, interviews, and guest articles.

- Tools like Gemini and Perplexity often cite sources from Reddit, Medium, and industry review sites.

3. Treat Perplexity Like the New SEO Frontier

- It behaves like a search engine but cites better than Google.

- Reverse-engineer what it shows for your category—and be part of that conversation.

4. Use Your Brand and Author Names Intentionally

- AI models often repeat branded phrasing they’ve seen consistently.

- Make it easy for them to connect your message to your name.

5. Monitor AI Visibility, Not Just Traffic

- Tools like Ahrefs now show whether your site is being cited in AI tools.

- Treat those citations as early signs of influence, even if they don’t drive traffic yet.

Frequently Asked Questions

Should I optimize my content for AI tools the same way I do for Google?

Not exactly. Google SEO still matters, but AI tools often pull from different sources (like forums or Reddit threads) and use different intent logic. You should optimize for clarity, citation-worthiness, and context, not just keyword targeting.

Why don’t most AI tools cite sources when generating content?

Because they treat generation as synthesis, not research. Unless prompted for facts, data, or comparisons, most tools see no need to cite. They’re trying to complete your task, not justify their response.

If users rarely click on citations, do they even matter?

Yes. While click-through rates may be low, being cited or mentioned builds brand trust, category association, and perception of authority—especially when tools like Perplexity or Gemini surface your name repeatedly across answers.

Can I track which AI tools are citing my content?

Yes. Ahrefs now includes an AI Citations report, and Perplexity shows inline references that can be tracked manually. Also monitor brand mentions on web properties like Reddit, Quora, LinkedIn, and third-party blogs.
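For the manual route, a small script can count how many of the source links in an AI answer point at your own site. This is a rough sketch: it assumes you have copied the cited URLs into a list by hand, and the helper names and `example.com` URLs are placeholders.

```python
from urllib.parse import urlparse


def cites_domain(url, domain):
    """True if the URL's host is the domain itself or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    domain = domain.lower()
    return host == domain or host.endswith("." + domain)


def count_citations(cited_urls, domain):
    """Count how many cited URLs point at the given domain."""
    return sum(cites_domain(url, domain) for url in cited_urls)


# Placeholder URLs standing in for links copied from an AI answer.
sources = [
    "https://www.example.com/blog/b2b-marketing",
    "https://example.com/pricing",
    "https://notexample.com/post",
    "https://reviews.example.org/example-com",
]
print(count_citations(sources, "example.com"))  # prints 2
```

Matching on the parsed hostname rather than on a substring avoids counting look-alike domains or pages that merely mention your brand in the URL path.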

What content formats are more likely to be cited?

Tools tend to cite:

- Lists and rankings (e.g. “Top 5 B2B CRMs”)

- Product comparisons

- How-to guides and frameworks

- Recent statistics with sources

- Authoritative opinion pieces with unique POVs

Is generative AI search replacing traditional SEO?

Not yet. But it’s eroding the top-of-funnel traffic that used to land on blogs and resource pages. AI tools now provide instant summaries where Google used to serve you clicks. That’s why you should treat traditional SEO and AI discoverability as parallel efforts, not replacements.

How do I get my content to appear in Perplexity, Gemini, or Claude?

There’s no direct submission, but here’s what helps:

- Publish on domains that get crawled frequently (your site, Medium, Substack, Quora)

- Use schema markup

- Get mentioned by other sources AI tools already trust

- Keep titles and intros declarative, summary-friendly, and fact-rich